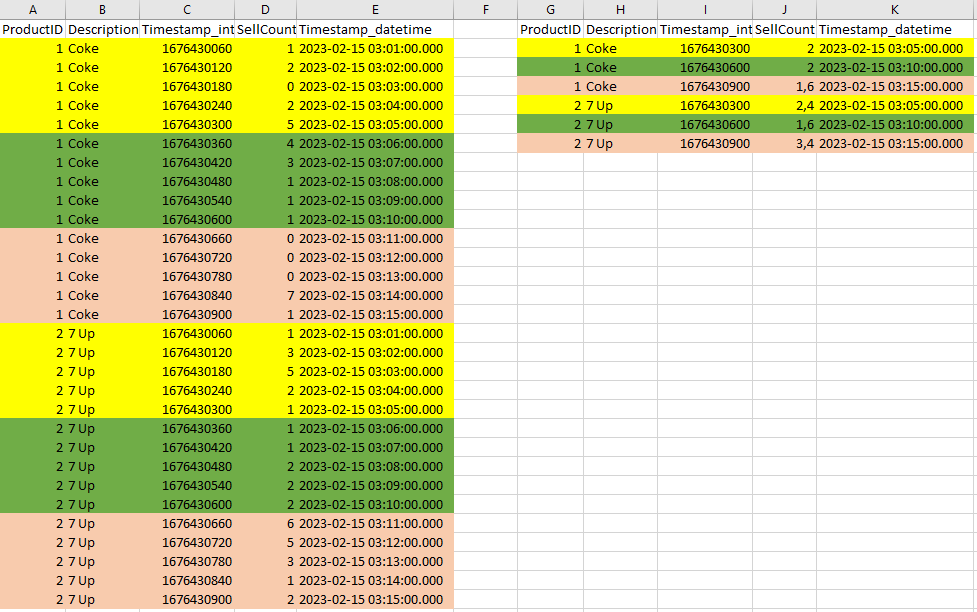

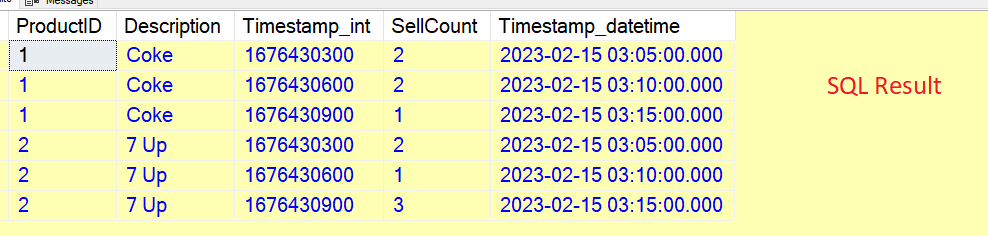

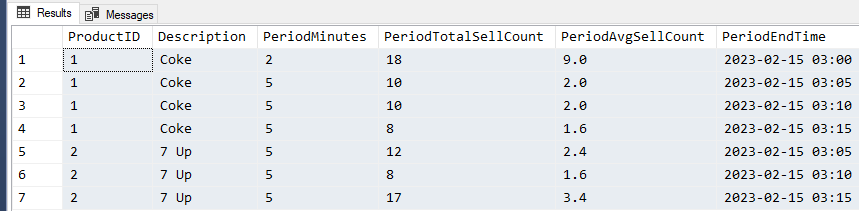

I have a dataset with one row per minute, identified by a date column. I want to group the data into 5-minute intervals and average the SellCount column for each interval.

For example, given 5 records, one each for minutes 1, 2, 3, 4 and 5, I want to collapse them into a single row whose date is the 5th minute.

Below is the code to create the table and insert the data.

CREATE TABLE SELL (ProductID int, Description varchar(10), Timestamp_int int, SellCount int, Timestamp_datetime datetime);

INSERT INTO SELL VALUES(1,'Coke',1676430060,1,'2023-02-15 03:01:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430120,2,'2023-02-15 03:02:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430180,0,'2023-02-15 03:03:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430240,2,'2023-02-15 03:04:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430300,5,'2023-02-15 03:05:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430360,4,'2023-02-15 03:06:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430420,3,'2023-02-15 03:07:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430480,1,'2023-02-15 03:08:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430540,1,'2023-02-15 03:09:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430600,1,'2023-02-15 03:10:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430660,0,'2023-02-15 03:11:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430720,0,'2023-02-15 03:12:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430780,0,'2023-02-15 03:13:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430840,7,'2023-02-15 03:14:00.000');
INSERT INTO SELL VALUES(1,'Coke',1676430900,1,'2023-02-15 03:15:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430060,1,'2023-02-15 03:01:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430120,3,'2023-02-15 03:02:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430180,5,'2023-02-15 03:03:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430240,2,'2023-02-15 03:04:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430300,1,'2023-02-15 03:05:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430360,1,'2023-02-15 03:06:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430420,1,'2023-02-15 03:07:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430480,2,'2023-02-15 03:08:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430540,2,'2023-02-15 03:09:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430600,2,'2023-02-15 03:10:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430660,6,'2023-02-15 03:11:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430720,5,'2023-02-15 03:12:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430780,3,'2023-02-15 03:13:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430840,1,'2023-02-15 03:14:00.000');
INSERT INTO SELL VALUES(2,'7 Up',1676430900,2,'2023-02-15 03:15:00.000');
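
To make the intended result concrete, here is a rough sketch of the kind of query I have in mind, not a tested solution. It assumes SQL Server (T-SQL), that every Timestamp_datetime falls on a whole minute, and that results should be grouped per product; the column aliases BucketEnd and AvgSellCount are names I made up for illustration.

```sql
-- Sketch: average SellCount per product in 5-minute buckets,
-- labelling each bucket with its last minute (03:01..03:05 -> 03:05).
-- Assumes timestamps have no seconds component.
SELECT
    ProductID,
    Description,
    -- Minutes since the base date 0 (1900-01-01), rounded up to the
    -- next multiple of 5 using integer division, then turned back
    -- into a datetime.
    DATEADD(MINUTE,
            ((DATEDIFF(MINUTE, 0, Timestamp_datetime) + 4) / 5) * 5,
            0) AS BucketEnd,
    -- Multiply by 1.0 so AVG works on decimals instead of
    -- truncating to an integer.
    AVG(SellCount * 1.0) AS AvgSellCount
FROM SELL
GROUP BY
    ProductID,
    Description,
    DATEADD(MINUTE,
            ((DATEDIFF(MINUTE, 0, Timestamp_datetime) + 4) / 5) * 5,
            0)
ORDER BY ProductID, BucketEnd;
```

With the sample data above, I would expect three rows per product, for 03:05, 03:10 and 03:15, with averages of 2.0, 2.0 and 1.6 for Coke and 2.4, 1.6 and 3.4 for 7 Up.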