Hello,

After thinking I could use a simple .bat file to handle some transformation steps in my SSIS package, I have since learned that they do not work when running when running via an SQL Server Agent job. I have read that it may be due to permissions issues, and / or SSIS not allowing pop-ups. I thought I was being clever using a step to execute the .bat file, only to find out that the SQL Server Agent does not allow it.

With that being said, I have a high priority SSIS package that needs to do the following append as described in the .bat script.

copy \ServerName\Folder\ClientName\File1.txt + \ServerName\Folder\ClientName\File2.txt \ServerName\Folder\ClientName\NewFile.txt /b

\archive\reformat \ServerName\ClientName\NewFile.txt \ServerName\Folder\ClientName\BackupNewFile.txt -d

del \ServerName\Folder\ClientName\Elig\OriginalFile.txt

How would I do this via a Script Task?

From what I interpret from the .bat code, it combines two files into a new file, creates a backup of the combined file and then deletes another file.

Thanks in advance.

Could you explain it in plain English without the bat file.

I did explain it. The explanation is after the .bat file.

I think something along these lines will work - untested, but the idea should get you started. Note: File.Move moves the file to the new location and retains file settings - where File.Copy then a File.Delete will reset file settings. If the destination folder is compressed you want to perform a file copy and then delete the original file to have the 'archived' file pick up the compressed folder option.

public void Main()

{

bool fireAgain = true;

// Get the file names and paths

string firstFile = Dts.Variables["User::FirstFile"].Value.ToString();

string secondFile = Dts.Variables["User::SecondFile"].Value.ToString();

string newFileName = Dts.Variables["User::NewFileName"].Value.ToString();

string backupFileName = Dts.Variables["User::BackupFileName"].Value.ToString();

try

{

// Copy data from first and second file into new file

File.AppendAllText(newFileName, File.ReadAllText(firstFile));

File.AppendAllText(newFileName, File.ReadAllText(secondFile));

File.Move(newFileName, backupFileName);

Dts.Events.FireInformation(0, "File Task", "File Task", string.Empty, 0, ref fireAgain);

Dts.TaskResult = (int)ScriptResults.Success;

}

catch (Exception ex)

{

Dts.Events.FireError(0, "File Task", ex.ToString(), string.Empty, 0);

}

}

It isn't really clear from the batch file what the end result should be - the OriginalFile.txt isn't mentioned anywhere except the delete...

It then looks like 2 files are created - NewFile.txt and BackupNewFile.txt...

Since this is in SSIS, I have to wonder why you even need to combine the files. You can easily setup a process that performs a loop (foreach-object) over the list of files, processes them as needed, then archives each file individually.

Jeff,

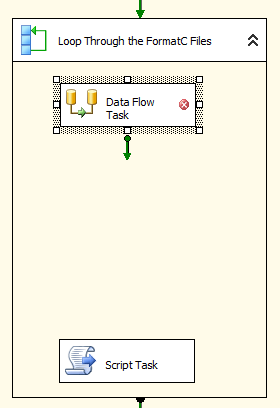

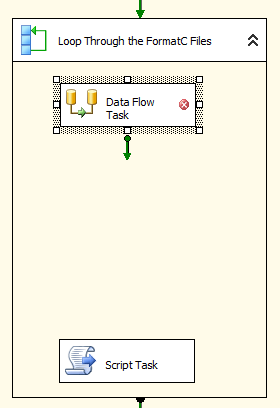

The foreach loop sounds like a great idea. I have started to set this up. So I have set the Collection settings tab in the foreach object to select the two individual files that I need to combine into the same TXT file. I understand that I need to now add a data flow step within the foreach loop. How would I program that piece to select the two files and append them together? I am guessing a flat file source and a flat file destination, but I am confused on the append portion.

Thank you so much for your assistance.

I think you missed the point - what are you doing with the files in SSIS in the first place? Are you loading these files into a database - or is this just a process of moving files?

What is the purpose of combining the files into a single file? Why is that necessary?

1 Like

The combined file then needs to be imported by another downstream package. The reason for the initial single files, is that they are created from different tables.

I think it would help to undersand what the requirement is in this whole process, what is the end goal. Maybe understanding the end game can helpnus recommend something that does not use bat at all

If the files have the same structure - which I assume they do because they are being combined - means you can create an import process to process that file type. No need to combine the files - this is where the for each loop will be utilized.

File1 is created from 'table1' as file1.txt - it is place in folder ..\ToBeProcessed... (whatever name you want)

File2 is created from 'table2' as file2.txt - it is also placed in the same folder.

The for each loop looks to your 'to be processed folder' and picks up all files that match the file specification (can be all .txt, or File.txt or any other filter). The file name is set to a user variable and passed to a data flow - the data flow uses a file connection to read the file and output the data to a destination.

After the data flow task - you can then add a script task or file system task (I prefer using a script task) that moves (use copy/delete if sending to a compressed folder) the processed file to an archive/backup location.

At the end of processing you now have 2 files in the backup/archive location and both files have been processed into the system.

All you need to do is move the 'downstream' packages logic into the data flow/for each loop.