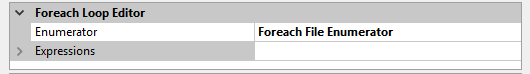

In the Foreach Loop Container, I have the enumerator configuration's Folder set to the folder where the file sits, and Files set to "*.xls".

There is one expression set up in the Foreach Loop, called "filenameretrieval", which uses the variable @[User::xlFileName]; that variable's value is blank in the variable definition. I'm not sure whether it needs a hard-coded path to the destination, but doesn't the folder path in the enumerator configuration take care of that?

Variable Mappings is set to Index 0.
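A rough Python model of what the Foreach File Enumerator passes to the mapped variable may help with the question above. It assumes the enumerator's "Retrieve file name" option (Fully qualified, Name and extension, or Name only) decides whether @[User::xlFileName] receives a full path or just a name. `enumerate_files`, the folder and the file names are hypothetical, and the file list is passed in so the sketch never touches the disk.

```python
import fnmatch
import ntpath  # Windows path rules, usable on any OS


def enumerate_files(folder, names, pattern="*.xls", retrieve="fully_qualified"):
    """Hypothetical model of the Foreach File Enumerator.

    folder   -- the enumerator's Folder setting
    names    -- file names found in that folder (passed in, no disk access)
    pattern  -- the Files setting, e.g. "*.xls"
    retrieve -- "fully_qualified", "name_and_extension" or "name_only"
    Yields the value that would land in the variable mapped to Index 0.
    """
    # Sorted only to make the sketch's output deterministic.
    for name in sorted(names):
        # Compare case-insensitively, as Windows file matching does.
        if not fnmatch.fnmatch(name.lower(), pattern.lower()):
            continue
        if retrieve == "fully_qualified":
            yield ntpath.join(folder, name)
        elif retrieve == "name_and_extension":
            yield name
        elif retrieve == "name_only":
            yield ntpath.splitext(name)[0]
        else:
            raise ValueError(f"unknown retrieve option: {retrieve}")


files = ["Sales.xls", "notes.txt", "Budget.XLS"]
print(list(enumerate_files(r"C:\Source", files)))
print(list(enumerate_files(r"C:\Source", files, retrieve="name_and_extension")))
```

In this model the Folder setting only tells the enumerator where to look; whether the folder ends up inside the variable depends on the retrieve option.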

The problem seems to be with the Excel Connection Manager:

Exception from HRESULT: 0xC020801C

Error at Package [Connection manager "Excel Connection Manager"]: SSIS Error Code DTS_E_OLEDBERROR. An OLE DB error has occurred. Error code: 0x80040E4D.

Error at Data Flow Task [Excel Source [2]]: SSIS Error Code DTS_E_CANNOTACQUIRECONNECTIONFROMCONNECTIONMANAGER. The AcquireConnection method call to the connection manager "Excel Connection Manager" failed with error code 0xC0202009. There may be error messages posted before this with more information on why the AcquireConnection method call failed.

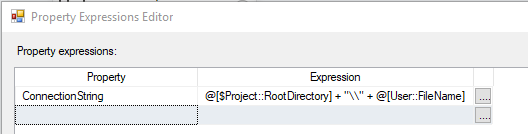

I'm trying to load the file name only, but the connection seems to expect a full path. Every time I put the path into the Excel connection, it reverts to an empty path.

I've set DelayValidation to True on all objects.

If I go into the Excel Source and click Columns, a message pops up at the bottom saying:

Exception from HRESULT: 0xC020801C

Error at Package [Connection manager "Excel Connection Manager"]: The connection string format is not valid. It must consist of one or more components of the form X=Y, separated by semicolons. This error occurs when a connection string with zero components is set on database connection manager.

Error at Package: The result of the expression "@[User::varSourceFolder]" on property "\Package.Connections[Excel Connection Manager].Properties[ConnectionString]" cannot be written to the property. The expression was evaluated, but cannot be set on the property.

Error at Package [Connection manager "Excel Connection Manager"]: SSIS Error Code DTS_E_OLEDBERROR. An OLE DB error has occurred. Error code: 0x80040E4D.

Error at Data Flow Task [Excel Source [2]]: SSIS Error Code DTS_E_CANNOTACQUIRECONNECTIONFROMCONNECTIONMANAGER. The AcquireConnection method call to the connection manager "Excel Connection Manager" failed with error code 0xC0202009. There may be error messages posted before this with more information on why the AcquireConnection method call failed.
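The errors above say the connection string must be made of Key=Value components separated by semicolons, and that the result of "@[User::varSourceFolder]" could not be written to ConnectionString. A small Python sketch of that Key=Value rule shows why a bare folder path fails it. `parse_connection_string` and `build_excel_connection_string` are hypothetical helpers, and the Jet 4.0 / "Excel 8.0" provider string is an assumption for .xls files (.xlsx files would use the ACE provider instead).

```python
def parse_connection_string(conn_str):
    """Hypothetical parser for an OLE DB-style connection string.

    Components look like Key=Value and are separated by semicolons;
    semicolons inside double quotes belong to the value.
    Raises ValueError for anything that is not Key=Value, or for zero components.
    """
    parts, buf, in_quotes = [], [], False
    for ch in conn_str:
        if ch == '"':
            in_quotes = not in_quotes
        if ch == ";" and not in_quotes:
            parts.append("".join(buf))
            buf = []
        else:
            buf.append(ch)
    parts.append("".join(buf))

    components = []
    for part in parts:
        part = part.strip()
        if not part:
            continue  # tolerate a trailing semicolon
        if "=" not in part:
            raise ValueError(f"not a Key=Value component: {part!r}")
        key, value = part.split("=", 1)
        components.append((key.strip(), value.strip()))
    if not components:
        raise ValueError("connection string has zero components")
    return components


def build_excel_connection_string(file_path):
    # Assumed provider settings for an .xls workbook with a header row.
    return ("Provider=Microsoft.Jet.OLEDB.4.0;"
            f"Data Source={file_path};"
            'Extended Properties="Excel 8.0;HDR=YES";')


print(parse_connection_string(build_excel_connection_string(r"C:\Source\Sales.xls")))
try:
    parse_connection_string(r"C:\Source")  # a bare folder path, like varSourceFolder
except ValueError as err:
    print("rejected:", err)
```

The Excel Connection Manager in SSIS also exposes an ExcelFilePath property that takes a plain file path, which may be a simpler target for a path expression than ConnectionString.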

I had this working before, but nothing seems to work now. My thinking is that I need two variables, one for the source folder and one for the destination folder, plus a third variable holding the file name. I would then combine source + file name to pick up the file, and destination + file name to move the file into the archive.

But I seem to need a full path to define the file name variable rather than just the name, and then it won't concatenate onto the destination variable, because that would be like concatenating two file paths.
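The source + file name / destination + file name plan can be sketched in Python with Windows path rules (`ntpath`). The sketch assumes the loop variable may hold either a bare name or a fully qualified path; reducing it to the base name first means the same name can be joined onto either folder without concatenating two paths. `source_and_archive_paths` and the folder names are hypothetical.

```python
import ntpath  # Windows path rules, usable on any OS


def source_and_archive_paths(source_folder, dest_folder, file_value):
    """Hypothetical helper: return (source_path, archive_path) for one file.

    file_value may be a bare name ("Sales.xls") or a full path
    ("C:\\Source\\Sales.xls"); basename() reduces either form to the name.
    """
    file_name = ntpath.basename(file_value)
    return (ntpath.join(source_folder, file_name),
            ntpath.join(dest_folder, file_name))


print(source_and_archive_paths(r"C:\Source", r"C:\Archive", "Sales.xls"))
print(source_and_archive_paths(r"C:\Source", r"C:\Archive", r"C:\Source\Sales.xls"))
```

In the package itself, the same idea would be an expression along the lines of `@[User::varSourceFolder] + "\\" + @[User::xlFileName]` (backslashes are escaped in SSIS expression strings), with a similar expression over a hypothetical `@[User::varArchiveFolder]` for the archive path.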

G