Hi Team SQLTeam

We have a database that over the last week started getting consistency errors

After checking all hardware was okay ran a dbcc checkdb

And had a about 130 Consistency errors accross multiple tables

Loaded up the data in a test Database and ran the dbcc Check DB ('DatabaseName', Repair_Allow_Data_Loss') command and then tested the application, this seemed to work, reports that would previously not run, were working again and when running the dbcc checkdb it came up as zero allocation and zero consistency errors

As this had worked in test, got the okay to do the same on the production environment on the weekend.

Put the Prod DB in Single User Mode, ran the repair, did a checkdb and all came up clear.

Back in the office today, after spending a Sunday wondering what Monday was going to be like...

Recieved a few calls regarding SQL error messages from the application

SQL Error Code : 37000

Ran CheckDB again and note that there were errors in 2 tables [1 table had the same amount as before the repair and the other table had 6 error messages, pre repair it was at 62] Other tables that previously had errors were not present.

Error messages are to do with Consistency Errors and read as follows

BARTAG TABLE

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1307959) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1307960) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1307961) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1307962) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1307963) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1308036) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1308037) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:1308038) allocated to object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 8976, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Page (1:1307959) was not seen in the scan although its parent (1:1298359) and previous (1:1307958) refer to it. Check any previous errors.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Index node page (1:1298359), slot 290 refers to child page (1:1307960) and previous child (1:1307959), but they were not encountered.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Index node page (1:1298359), slot 291 refers to child page (1:1307961) and previous child (1:1307960), but they were not encountered.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Index node page (1:1298359), slot 292 refers to child page (1:1307962) and previous child (1:1307961), but they were not encountered.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Index node page (1:1298359), slot 293 refers to child page (1:1307963) and previous child (1:1307962), but they were not encountered.

Msg 8978, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Page (1:1307964) is missing a reference from previous page (1:1307963). Possible chain linkage problem.

Msg 8976, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Page (1:1308036) was not seen in the scan although its parent (1:1298359) and previous (1:1308035) refer to it. Check any previous errors.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Index node page (1:1298359), slot 367 refers to child page (1:1308037) and previous child (1:1308036), but they were not encountered.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Index node page (1:1298359), slot 368 refers to child page (1:1308038) and previous child (1:1308037), but they were not encountered.

Msg 8978, Level 16, State 1, Line 1

Table error: Object ID 2116918613, index ID 1, partition ID 72057620090847232, alloc unit ID 72057621783576576 (type In-row data). Page (1:1308039) is missing a reference from previous page (1:1308038). Possible chain linkage problem.

DBCC results for 'BARTAG'.

There are 925546 rows in 102961 pages for object "BARTAG".

CHECKTABLE found 0 allocation errors and 18 consistency errors in table 'BARTAG' (object ID 2116918613).

repair_allow_data_loss is the minimum repair level for the errors found by DBCC CHECKTABLE (DBNAME.dbo.BARTAG).

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

MVTSTK TABLE

Msg 8939, Level 16, State 98, Line 1

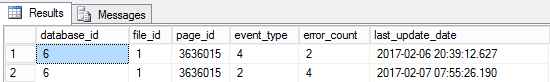

Table error: Object ID 70291310, index ID 4, partition ID 72057620278804480, alloc unit ID 72057621984509952 (type In-row data), page (1:3636015). Test (IS_OFF (BUF_IOERR, pBUF->bstat)) failed. Values are 133129 and -4.

Msg 8928, Level 16, State 1, Line 1

Object ID 70291310, index ID 4, partition ID 72057620278804480, alloc unit ID 72057621984509952 (type In-row data): Page (1:3636015) could not be processed. See other errors for details.

Msg 2533, Level 16, State 1, Line 1

Table error: page (1:3636016) allocated to object ID 70291310, index ID 4, partition ID 72057620278804480, alloc unit ID 72057621984509952 (type In-row data) was not seen. The page may be invalid or may have an incorrect alloc unit ID in its header.

Msg 8976, Level 16, State 1, Line 1

Table error: Object ID 70291310, index ID 4, partition ID 72057620278804480, alloc unit ID 72057621984509952 (type In-row data). Page (1:3636015) was not seen in the scan although its parent (1:3634670) and previous (1:3636014) refer to it. Check any previous errors.

Msg 8980, Level 16, State 1, Line 1

Table error: Object ID 70291310, index ID 4, partition ID 72057620278804480, alloc unit ID 72057621984509952 (type In-row data). Index node page (1:3634670), slot 8 refers to child page (1:3636016) and previous child (1:3636015), but they were not encountered.

Msg 8978, Level 16, State 1, Line 1

Table error: Object ID 70291310, index ID 4, partition ID 72057620278804480, alloc unit ID 72057621984509952 (type In-row data). Page (1:3636017) is missing a reference from previous page (1:3636016). Possible chain linkage problem.

DBCC results for 'MVTSTK'.

There are 9338432 rows in 491502 pages for object "MVTSTK".

CHECKTABLE found 0 allocation errors and 6 consistency errors in table 'MVTSTK' (object ID 70291310).

repair_allow_data_loss is the minimum repair level for the errors found by DBCC CHECKTABLE (NEUMANN.dbo.MVTSTK).

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

I read a bit of information regarding the error messages online, but have essentially become overwhelmed with the amount of information available.

Essentially a greenhorn in the world of SQL, inherited the Database as part of the multifaceted role that I am in

Has been running for a year without any SQL errors such as the above, and a bit lost on what to do.

As the initial repair has not worked that well, do not want to keep doing repair_allow_data_loss as a bandaid for the rest of the life of the DB, has also been a lot of late nights troubleshooting and reading and trying to get my head around the issue.

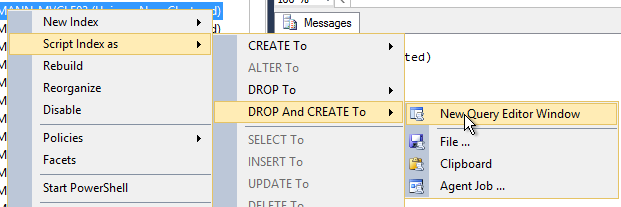

One site recommended repairing the index on the INDEX ID mentioned in the error message, tried this, and had no luck

I restored the Database [To a Test Instance] that was made an hour after the repair_allow_data_loss and ran checkdb again and noted that the errors were there.

Did i mentioned that immediately after the repair i ran a checkdb and got no errors.

Hoping to be able to fix up what we have, as production DB runs 24/6.5 so not wanting to inform management that we might have to go back in time almost 10 days or more!

One other thing to note

Ran the command DBCC CHECKDB ([TESTINGDB]) WITH No_INFOMSGS, ALL_ERRORMSGS on the test database that was repaired and which has been used for training since being repaired and got no error messages.

All up confused, overwhelmed and would appreciate if someone could assist with this issue, or point me in the right direction

Kind Regards

Dan

NONE

NONE